PROTOSYNTHETIC

When the Interview Question Becomes the Product

How I turned an open-ended design challenge into an AI-powered requirements gathering system that generates design artifacts

Role: Solo Designer & Developer

Timeline: 3 weeks (concept to working prototype)

Stack: React, Next.js, Anthropic Claude API, TailwindCSS, DaisyUI

At a Glance

An AI-powered meta-design tool that transforms open-ended design challenges into systematic requirements gathering. Instead of answering 'design a 1000-floor elevator,' I built Protosynthetic, a system that asks all the right questions: it generates branching question trees, extracts answers from knowledge bases, and synthesizes requirements automatically.

THE CHALLENGE

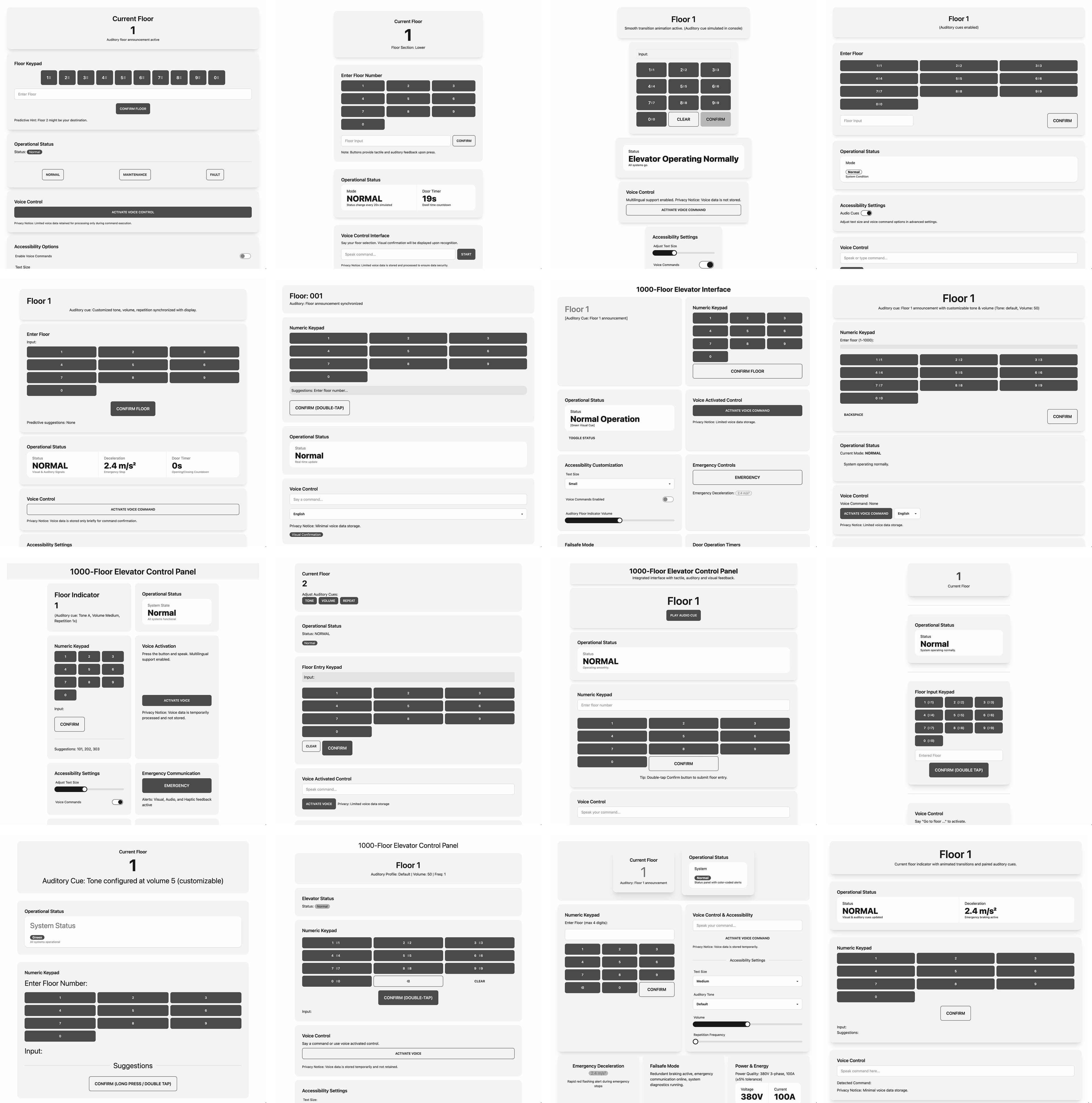

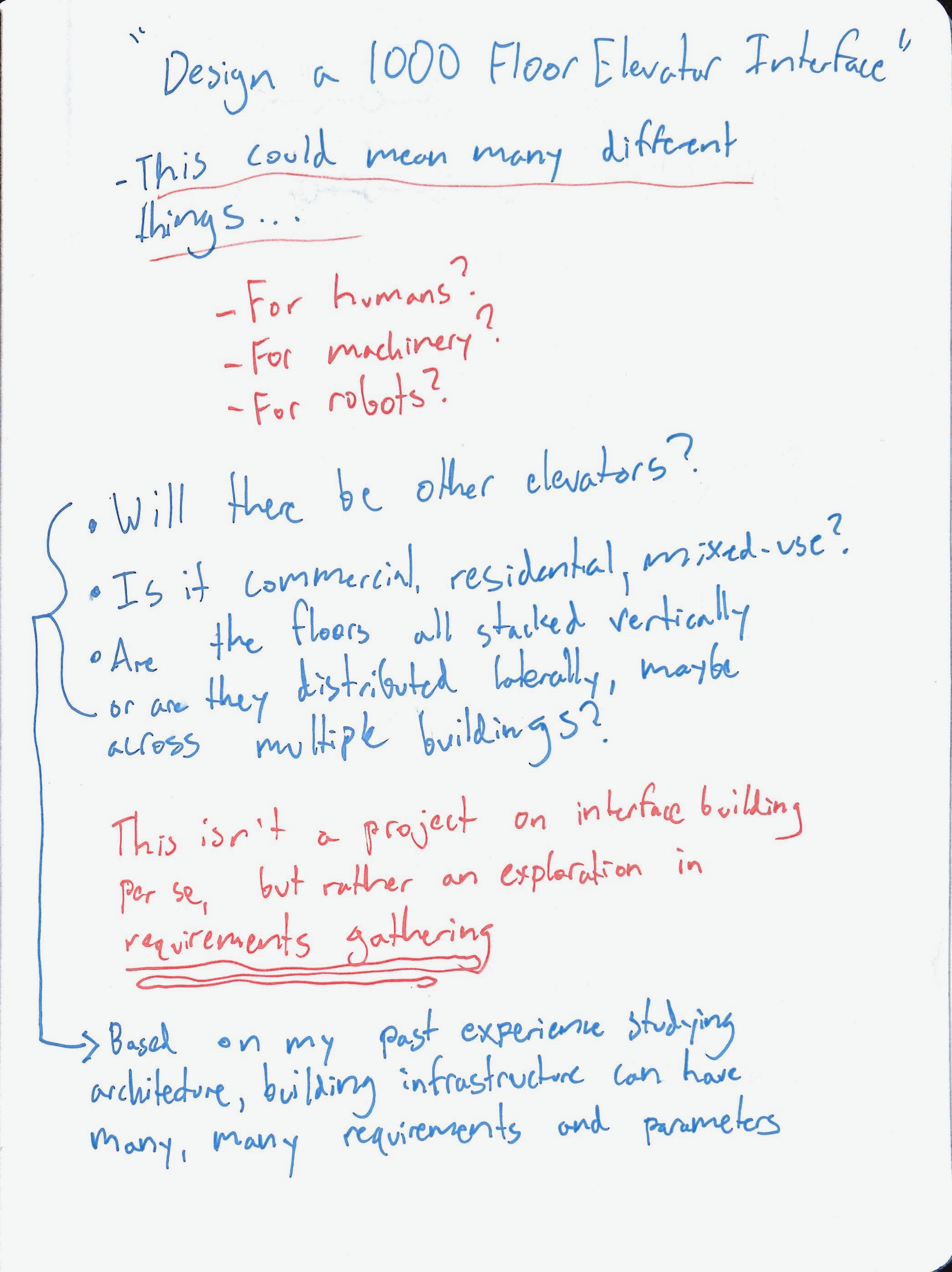

During an interview process, I received a design task:

"Design the interface for a 1000-floor elevator."

Simple question. Impossible to answer well without more information.

Who uses this elevator? What building type? What constraints exist? What problems are we actually solving? The question was deliberately vague - a test of how I'd handle ambiguity.

I could have made assumptions and designed something generic. Instead, I built a tool that systematically eliminates assumptions through AI-powered requirements gathering.

THE APPROACH

Rather than answer the question, I created a system that helps anyone answer similar open-ended design challenges:

- AI asks progressively tailored questions about user needs and constraints

- Branching question tree explores both breadth and depth of requirements

- Knowledge base integration suggests answers from existing documentation

- Requirements automatically distilled from accumulated Q&A

- Mockups generated on demand at any point in the exploration

The result: a meta-design tool that turns requirements gathering into an interactive, AI-guided conversation.

RESEARCH: WHY THIS QUESTION IS HARD

LEARNING FROM OTHERS

Before jumping into design, I did what any good designer should: research.

I looked for how others approached this classic interview question. They all agreed on one point: any definitive answer would require making assumptions that might not be true.

The simplicity of the question was the problem. Without understanding the users, their needs, the building context, accessibility requirements, traffic patterns, or technical constraints, you're just guessing.

From my architecture studies, I knew this intimately. So many parameters influence a building and its interior systems. Elevators aren't just boxes with buttons - they're complex systems shaped by human behavior, building codes, structural limitations, and usage patterns.

I could list assumptions and design accordingly. But that felt like missing the deeper challenge.

Online discussions revealed the core problem: the question is deliberately vague. Any solution requires assumptions about users, context, and constraints that may or may not be valid.

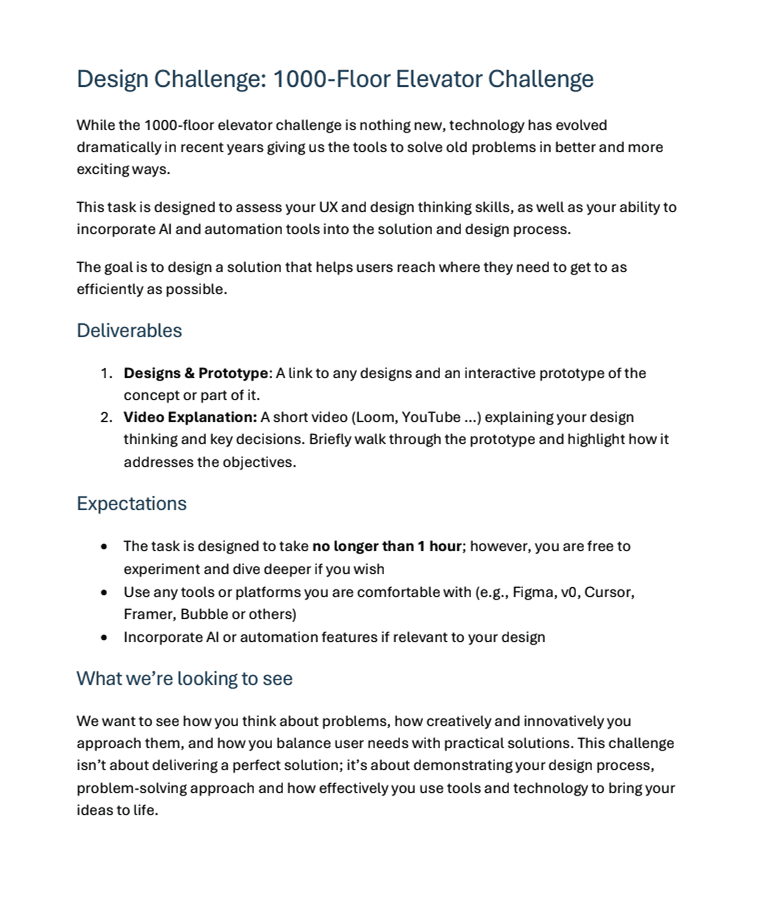

THE ASSIGNMENT

"Design the interface for a 1000-floor elevator."

That's it. No additional context. No user research. No requirements document. Just seven words and a blank canvas.

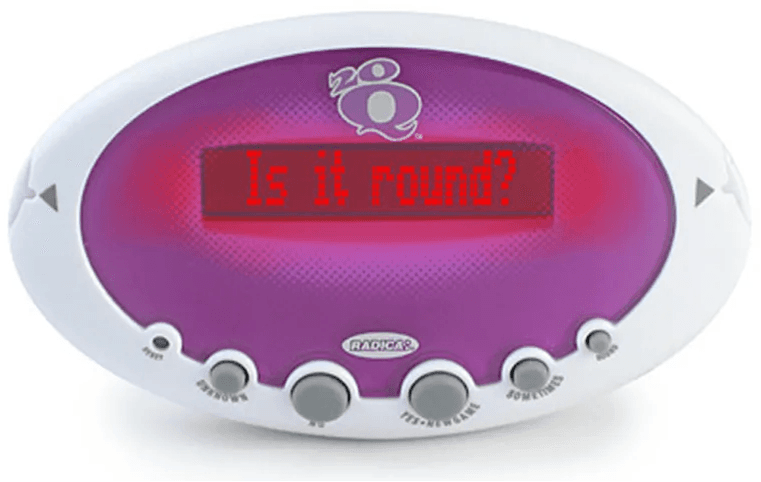

FROM CHILDHOOD GAMES TO PROFESSIONAL TOOLS

THE 20 QUESTIONS BREAKTHROUGH

Then I remembered something from childhood: a handheld 20 Questions game. You'd think of any object, and through a series of progressively tailored yes/no questions, the device would pinpoint what you had in mind with remarkable accuracy.

The magic wasn't in the answers - it was in the questions themselves. Each question built on previous responses, systematically narrowing possibilities while exploring relevant dimensions.

What if I could create a professional version of this for design requirements?

Instead of me making assumptions about the elevator, what if an AI asked questions about what the stakeholder wanted to build? Questions that built on each other. Questions that surfaced considerations you might otherwise overlook.

Turn requirements gathering into a systematic, AI-guided exploration.

The 20 Questions handheld game used progressive questioning to narrow down infinite possibilities. Could this same principle apply to design requirements?

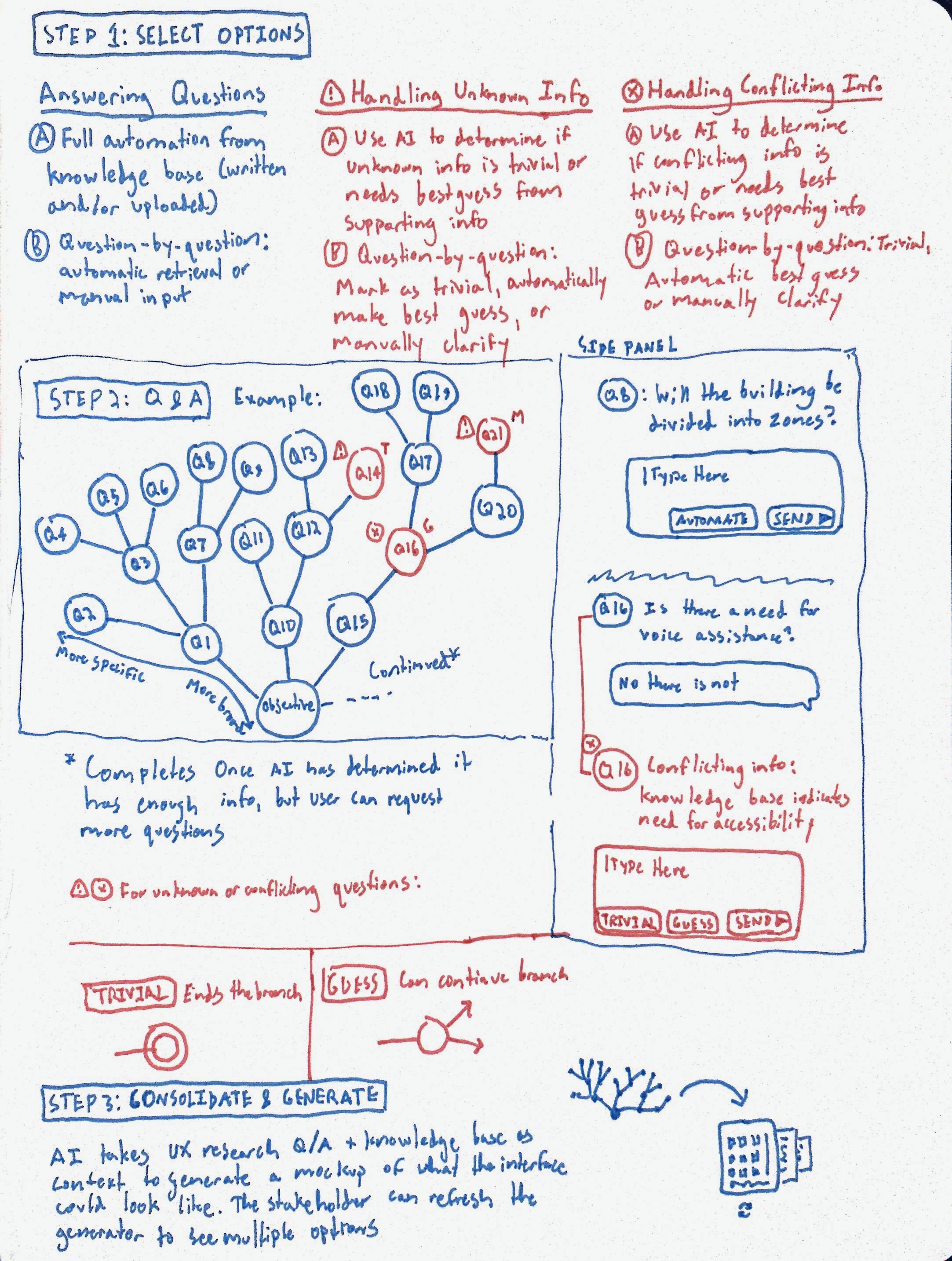

THE CORE CONCEPT

Traditional requirements gathering is linear: stakeholder describes needs, designer asks clarifying questions, document gets written.

This approach is different: the AI becomes the interviewer, systematically exploring the problem space through a branching question tree. Each answer spawns new questions. The tree structure ensures both breadth (covering all major considerations) and depth (diving into specifics where needed).

At any point, generate mockups based on the accumulated requirements. Keep exploring, generate again. The requirements evolve, the designs evolve.

Requirements gathering becomes ideation.

Early sketch exploring how AI-guided questioning could systematically surface design requirements without forcing assumptions

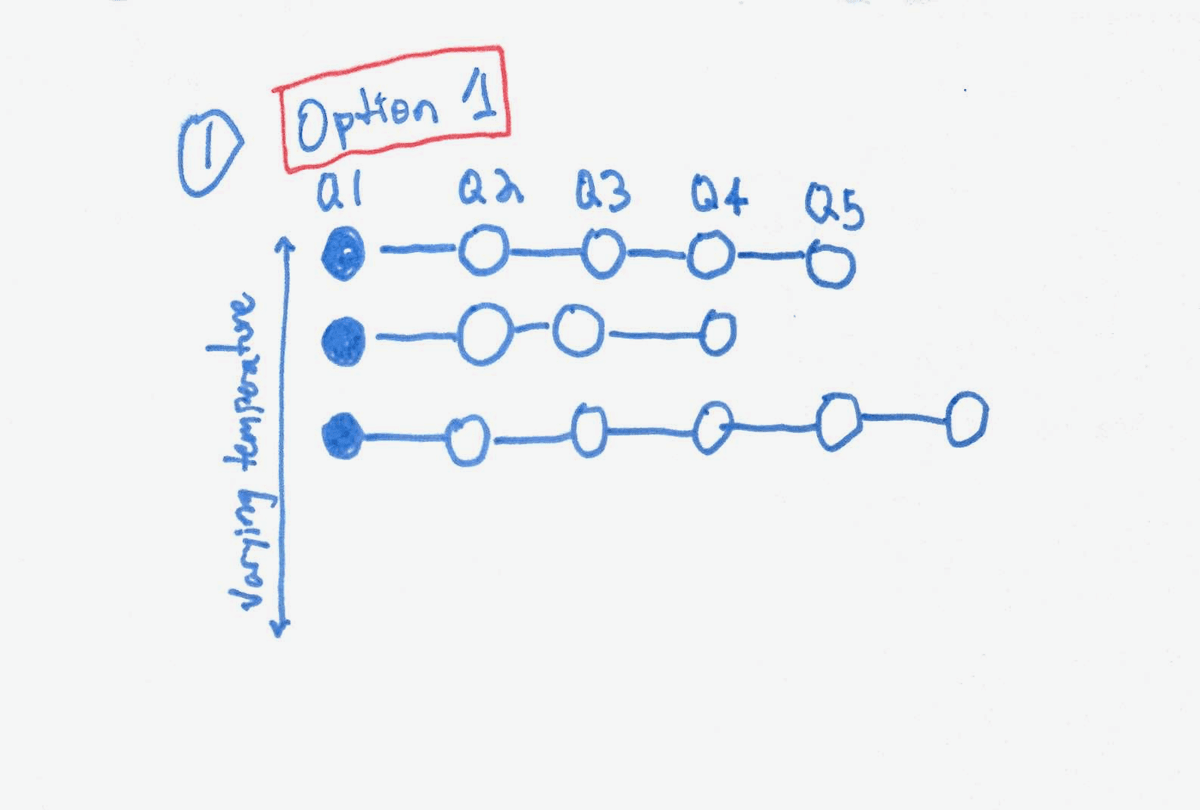

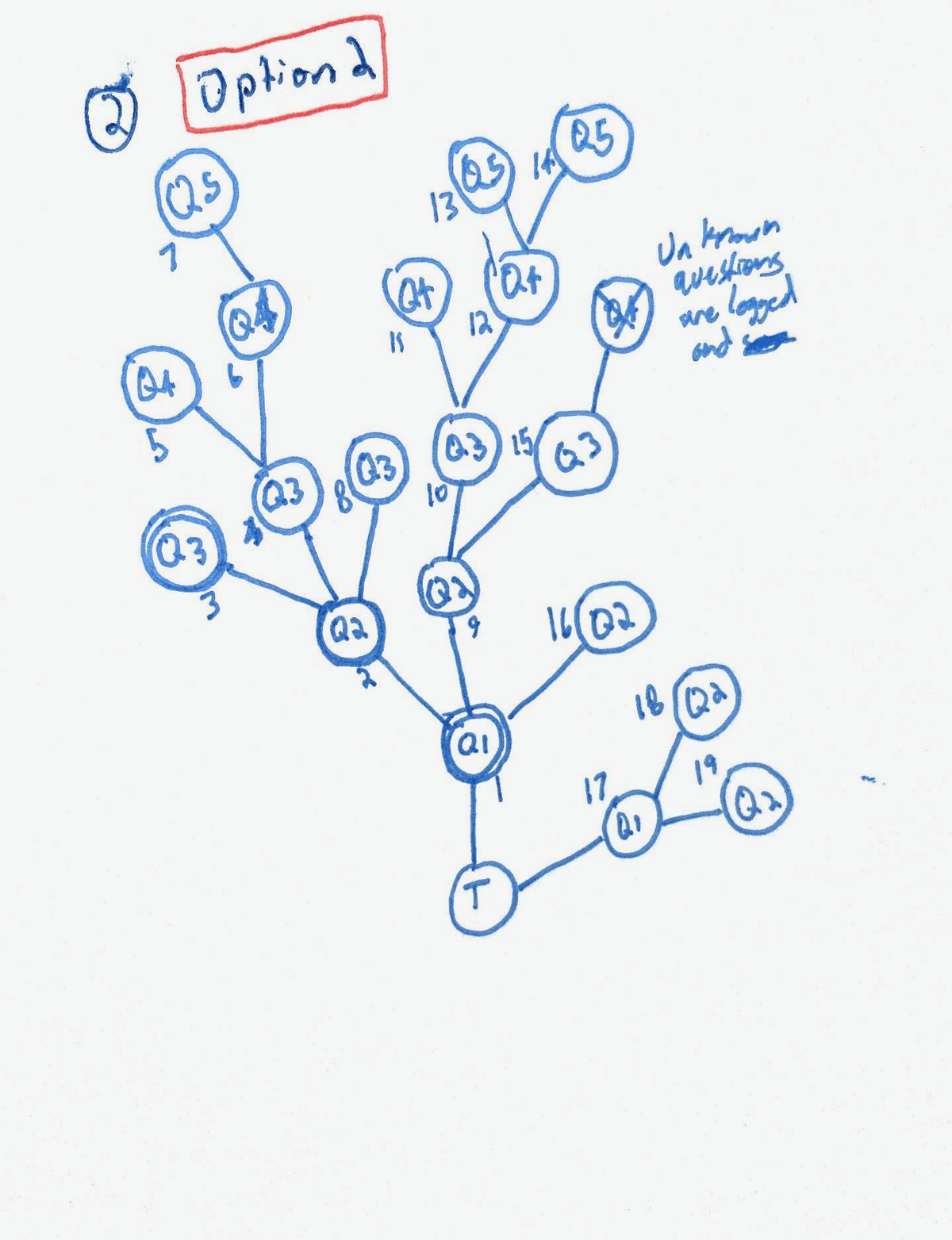

TWO APPROACHES: PARALLEL VS TREE

DECIDING HOW THE AI SHOULD THINK

With the core concept defined, I needed to figure out how the AI would structure its questioning. Two architectures emerged:

Parallel Sequences

Pros:

- Diversified exploration

- Different sampling temperatures per sequence

- Independent progression

Cons:

- Siloed questioning

- Missed interconnections

- Harder to see relationships

- Doesn't reflect unified solution

Branching Tree

✓ SELECTED

Pros:

- Breadth and depth simultaneously

- Contextually connected

- Explores relationships

- Unified solution space

- Visual structure makes sense

Cons:

- More complex to implement

- Requires careful prompt engineering

The branching tree better reflected how design considerations interconnect. Every question relates to the central problem while exploring distinct angles.

The Complete System Design

Comprehensive sketch showing the full system: tree visualization on the left, chat interface on the right, with automation features and mockup generation. This became the implementation blueprint.

BUILDING THE SYSTEM

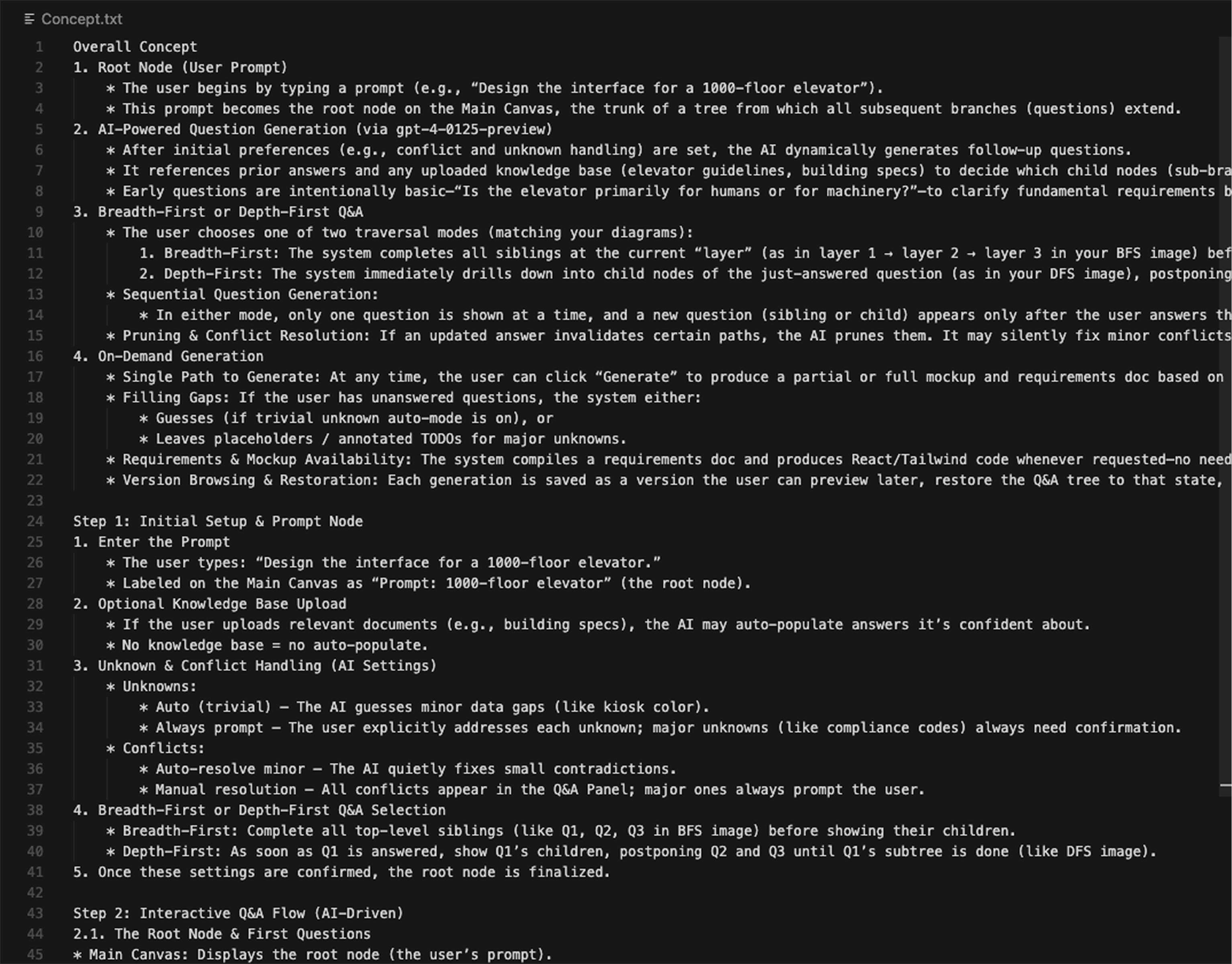

FROM SKETCHES TO SPECIFICATION

With the architecture decided, I needed a comprehensive plan. I put together a detailed specification document outlining all functionality, data structures, API endpoints, and user flows.

The process was iterative - sketching, prompting an LLM to pressure-test the plan, refining, repeating. The goal was to ensure the specification was both comprehensive and executable before writing any code.

This upfront planning paid off. The implementation went smoothly because the hard architectural decisions were already made.

Comprehensive specification document covering system architecture, data models, API design, and user flows. Created through iterative refinement with LLM feedback to ensure completeness.

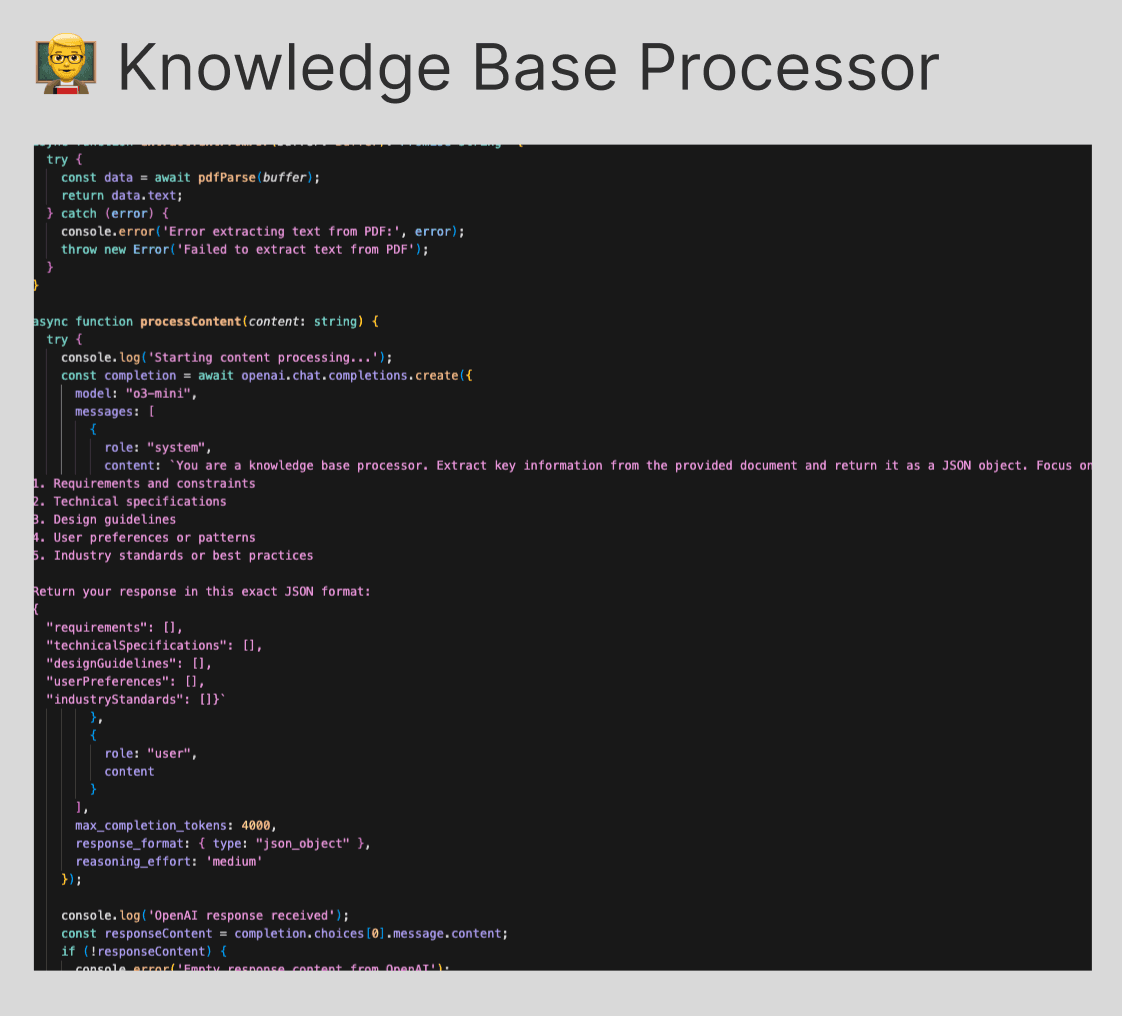

MULTI-AGENT ARCHITECTURE

The system required four distinct AI agent roles, each with specific responsibilities and prompt engineering:

Knowledge Base Processor: takes uploaded documents or entered text at project initialization and distills them into a unified knowledge corpus. This corpus becomes the source for suggesting answers to questions throughout the session.

Responsibilities:

- Parse and chunk uploaded documents

- Extract key facts, requirements, constraints

- Create searchable knowledge base

- Maintain semantic understanding for retrieval
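The parse-and-chunk step is not described in detail. As a minimal sketch, assuming plain-text input, overlapping fixed-size word windows could look like this; `chunkText`, the `Chunk` shape, and the default size and overlap are hypothetical, not Protosynthetic's actual values.

```typescript
// Hypothetical chunk shape: a sequential id plus the text it covers.
interface Chunk {
  id: number;
  text: string;
}

// Split text into overlapping word windows so a fact that straddles a
// boundary still appears intact in at least one chunk.
function chunkText(text: string, size = 200, overlap = 40): Chunk[] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: Chunk[] = [];
  const step = size - overlap;
  for (let start = 0; start < words.length; start += step) {
    chunks.push({ id: chunks.length, text: words.slice(start, start + size).join(" ") });
    if (start + size >= words.length) break; // this window already reached the end
  }
  return chunks;
}
```

Overlap trades a little duplication for fewer facts cut in half at chunk boundaries.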

Four specialized AI agents handle distinct aspects of the system: knowledge processing, question generation, requirements synthesis, and mockup creation.

SEEING IT IN ACTION

FROM CONCEPT TO WORKING TOOL

After three weeks of design and development, Protosynthetic was ready. The system works in four stages:

- SETUP: Define the project and optionally upload knowledge materials

- EXPLORATION: Answer questions as the AI builds a requirement tree

- SYNTHESIS: Requirements automatically documented from accumulated answers

- GENERATION: Create mockups on demand, iterate, regenerate

Let me walk you through each stage.

STAGE 1: PROJECT INITIALIZATION

Every session starts with context. Describe what you're building, upload any relevant documents (RFPs, specs, research), and choose your exploration strategy (breadth-first or depth-first). The knowledge base processor immediately goes to work, distilling uploaded materials into a searchable corpus.

Project initialization: describe what you're building, upload knowledge materials, and select exploration strategy. The system processes everything before generating the first questions.

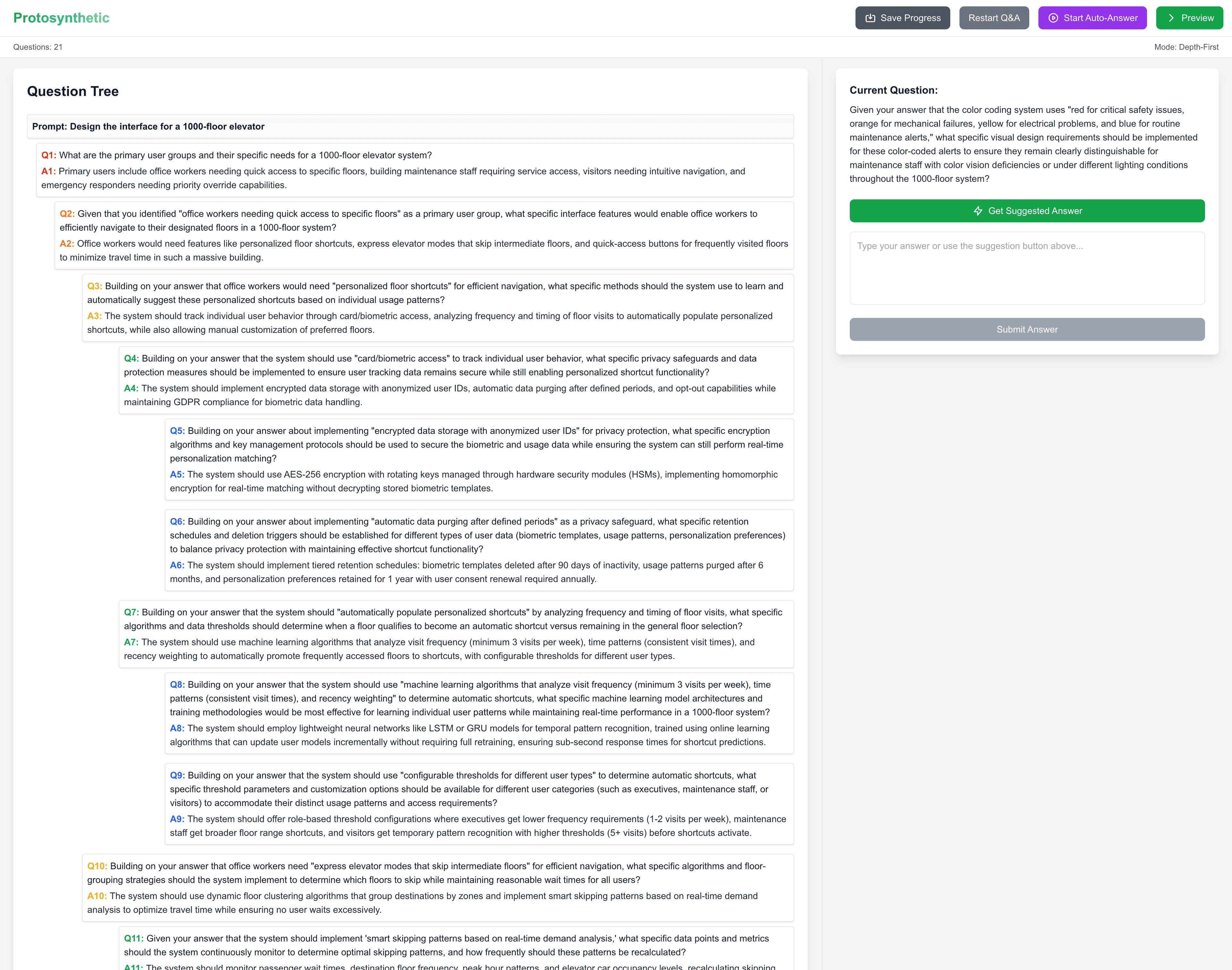

STAGE 2: SYSTEMATIC EXPLORATION

The core of Protosynthetic is the Q&A interface. Tree visualization on the left shows the evolving question structure. Chat interface on the right lets you answer the current question.

Three ways to answer each question:

Method 1: Manual Entry

Type your own answers for complete control. Best when you have specific requirements or want to think through answers carefully.

Method 2: AI-Suggested Answers

Let the AI suggest answers based on your knowledge base. Extracted answers are editable before submission. Only available if you uploaded materials during setup.
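How suggestions are grounded in the knowledge base isn't specified. The sketch below assumes simple keyword overlap as a stand-in for semantic retrieval: it picks the chunks that share the most terms with the current question and wraps them in a prompt. `topChunks`, `buildSuggestionPrompt`, and the prompt wording are illustrative assumptions.

```typescript
interface Chunk {
  id: number;
  text: string;
}

// Lowercased set of words longer than two characters, punctuation stripped.
function terms(s: string): Set<string> {
  return new Set(s.toLowerCase().split(/[^a-z0-9]+/).filter((w) => w.length > 2));
}

// Rank chunks by how many question terms they share; keep the top k with any overlap.
function topChunks(question: string, chunks: Chunk[], k = 3): Chunk[] {
  const q = terms(question);
  return chunks
    .map((c) => ({ c, score: Array.from(terms(c.text)).filter((t) => q.has(t)).length }))
    .filter((x) => x.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((x) => x.c);
}

// Ask the model to answer only from the retrieved excerpts.
function buildSuggestionPrompt(question: string, chunks: Chunk[]): string {
  const context = topChunks(question, chunks)
    .map((c) => `[${c.id}] ${c.text}`)
    .join("\n");
  return `Answer using only these excerpts. If they do not cover the question, say so.\n\n${context}\n\nQuestion: ${question}`;
}
```

Keyword overlap is crude (it even matches filler words like "the"); embedding-based search would be a closer fit for the semantic retrieval the knowledge base processor is meant to support.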

Method 3: Full Automation

Auto-Answer mode continuously generates and submits AI-suggested answers without user intervention, rapidly building out the requirement tree. It can be stopped at any point. Only available with a knowledge base.
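The Auto-Answer loop could be structured roughly as follows. This is a sketch under assumptions: the three callbacks, the `StopSignal` flag set by a Stop button, and the `maxSteps` safety cap are hypothetical, not the tool's actual control flow.

```typescript
// Hypothetical callbacks; in the app these would call the backend agents.
interface AutoAnswerDeps {
  nextQuestion: () => Promise<string | null>; // null when no question is pending
  suggestAnswer: (question: string) => Promise<string>;
  submit: (question: string, answer: string) => Promise<void>;
}

// Mutable flag a Stop button can set; checked before each question.
interface StopSignal {
  stopped: boolean;
}

// Fetch, auto-answer, and submit questions until stopped, exhausted, or capped.
async function autoAnswer(deps: AutoAnswerDeps, signal: StopSignal, maxSteps = 50): Promise<number> {
  let answered = 0;
  while (!signal.stopped && answered < maxSteps) {
    const question = await deps.nextQuestion();
    if (question === null) break;
    const answer = await deps.suggestAnswer(question);
    await deps.submit(question, answer);
    answered++;
  }
  return answered;
}
```

Checking the flag between questions means a stop request takes effect after the in-flight answer is submitted, so the tree never holds a half-written node.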

BREADTH-FIRST VS DEPTH-FIRST

The two exploration modes produce noticeably different question trees:

Breadth-First Exploration

Breadth-First mode systematically covers high-level considerations across the problem space before diving into specifics. Notice how the tree grows wide first, then deep - ensuring major aspects aren't missed.

Four top-level questions generated first. Then three follow-ups per question. Then three follow-ups for each of those. Systematic coverage.
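One way to get this wide-then-deep growth is a first-in, first-out queue. In this sketch, `expand` is a hypothetical stand-in for the model call that returns follow-up questions; depth starts at 1 for top-level questions and `maxDepth` bounds how far the tree grows.

```typescript
interface QNode {
  id: string;
  depth: number; // 1 for top-level questions
}

// Breadth-first: a FIFO queue means every question at one depth is
// visited before any question at the next depth.
function breadthFirstOrder(roots: QNode[], expand: (n: QNode) => QNode[], maxDepth: number): string[] {
  const order: string[] = [];
  const queue = roots.slice();
  while (queue.length > 0) {
    const node = queue.shift()!;
    order.push(node.id);
    if (node.depth < maxDepth) queue.push(...expand(node));
  }
  return order;
}
```

With four roots, three follow-ups each, and `maxDepth` of 2, all four top-level questions come before the first follow-up. `Array.prototype.shift` is linear, which is fine at the scale of a question tree.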

Depth-First Exploration

Depth-First mode prioritizes exploring follow-up questions to maximum depth (5 levels) before backtracking to cover sibling branches. Better for projects needing detailed requirement exploration quickly.

Goes deep on one branch first, exploring nuances and edge cases. Then backtracks to explore alternative angles. Prioritizes specificity.
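Depth-first mode maps naturally onto a stack instead of a queue. The sketch below uses the same kind of hypothetical `expand` stand-in and treats the 5-level limit as `maxDepth = 5`, counting top-level questions as depth 1; how the real tool counts levels is an assumption.

```typescript
interface QNode {
  id: string;
  depth: number; // 1 for top-level questions
}

// Depth-first: a LIFO stack follows one branch down to maxDepth,
// then backtracks to the nearest unexplored sibling.
function depthFirstOrder(roots: QNode[], expand: (n: QNode) => QNode[], maxDepth = 5): string[] {
  const order: string[] = [];
  // Push in reverse so the first root (and each first child) is popped first.
  const stack = roots.slice().reverse();
  while (stack.length > 0) {
    const node = stack.pop()!;
    order.push(node.id);
    if (node.depth < maxDepth) stack.push(...expand(node).reverse());
  }
  return order;
}
```

Swapping the queue for a stack is the only structural difference from breadth-first order; the node shape and expansion step stay the same.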

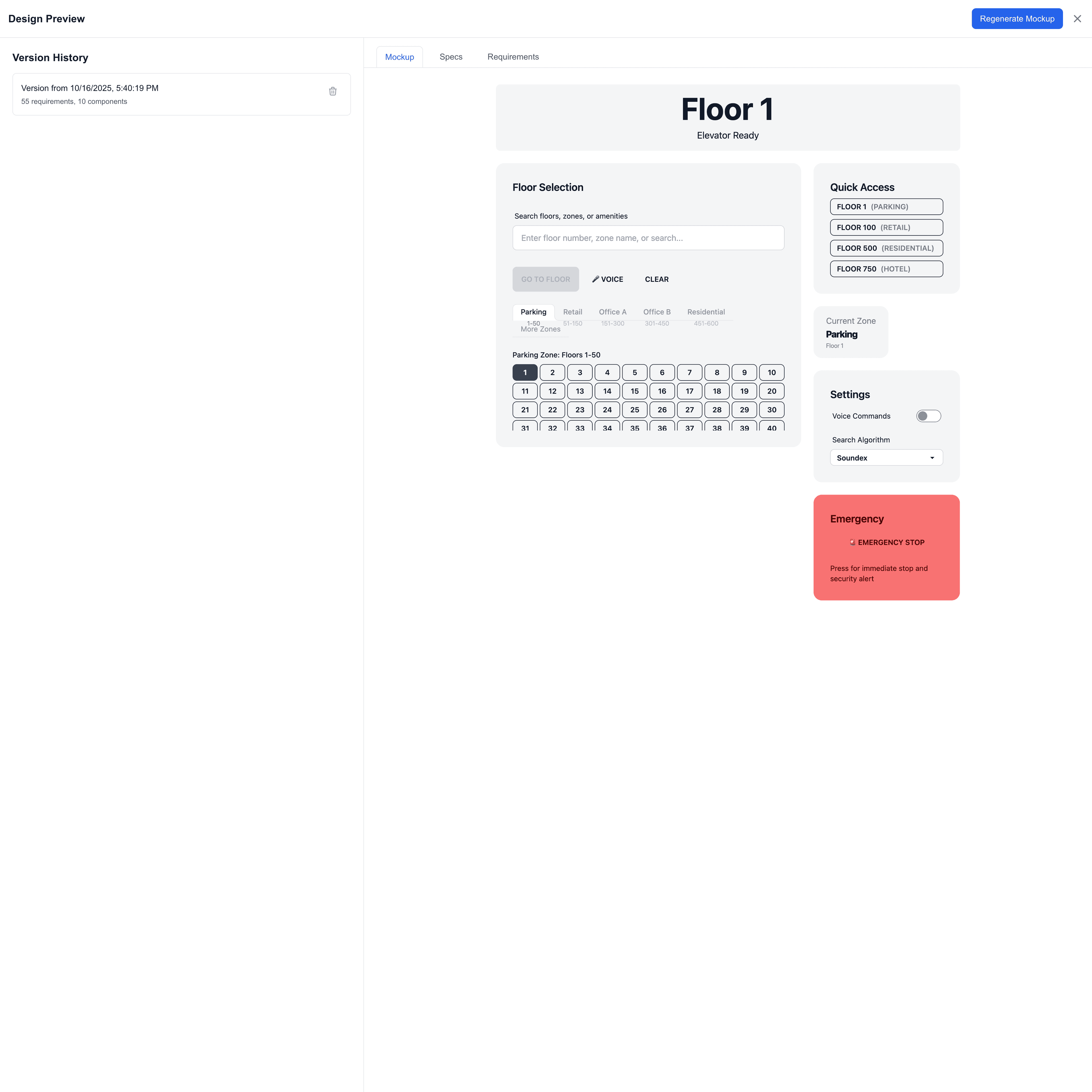

STAGES 3 & 4: SYNTHESIS AND GENERATION

At any point during exploration - after 5 questions or 50 - you can generate mockups. The UX Researcher agent synthesizes all answers into a requirements document. The UX Wireframe Designer agent creates working mockups based on those requirements. Generate once. Answer more questions. Generate again.

Iterative Mockup Generation

Generate mockups at any point. As you answer more questions and requirements become more detailed, regenerate to see designs evolve. Each generation incorporates all accumulated context.

THREE VIEWS OF THE SOLUTION

Each generation produces three outputs:

The Mockup tab shows the live, interactive design. Built with React, Tailwind, and DaisyUI - not static images. You can interact with the UI, test flows, and see real component behavior.

FROM ONE QUESTION TO INFINITE SOLUTIONS

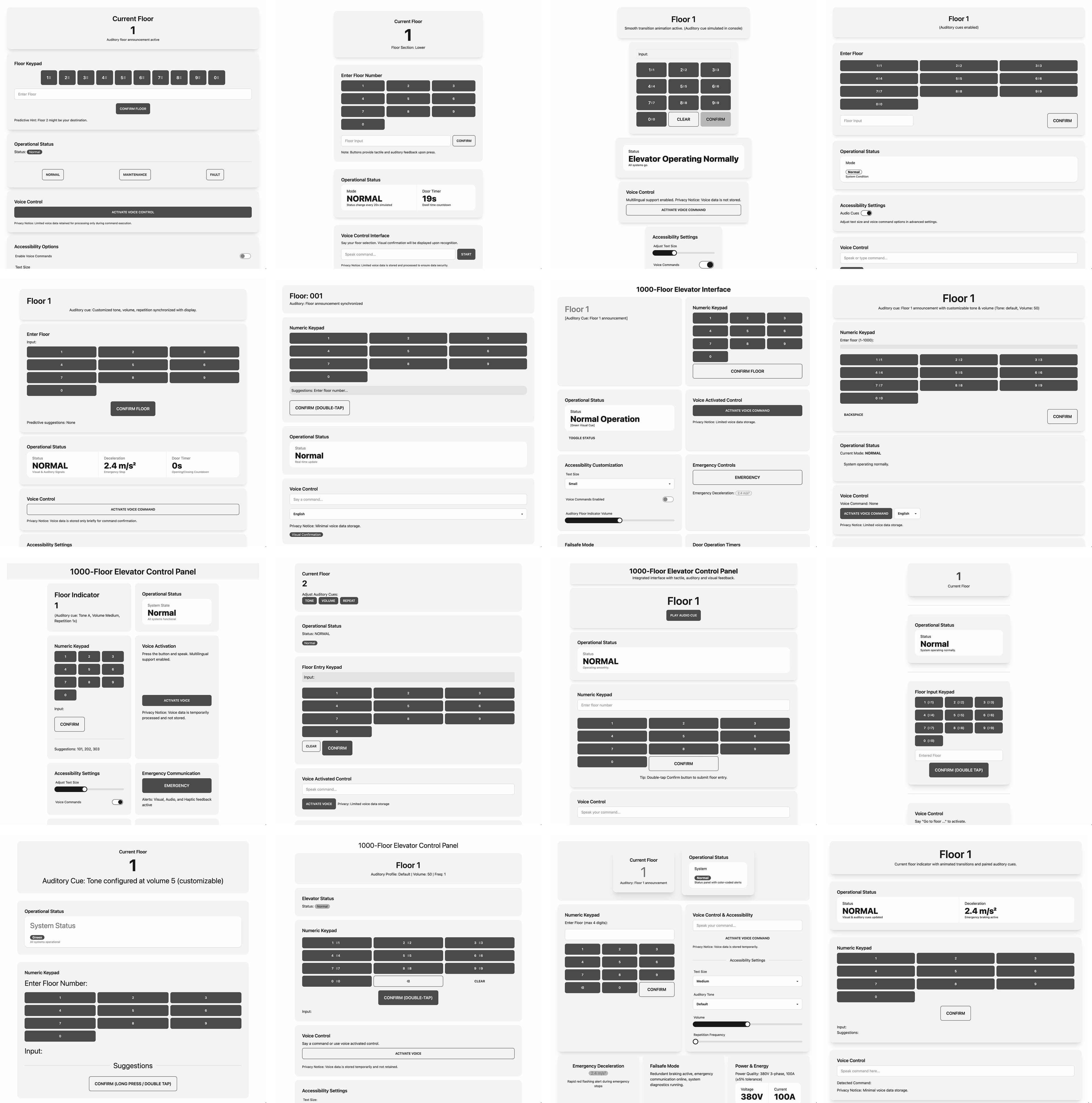

Here's what emerged from systematically exploring the original interview question: "Design the interface for a 1000-floor elevator." The system didn't give me one answer. It gave me a framework for generating infinite answers, each tailored to specific contexts, users, and constraints discovered through AI-guided questioning.

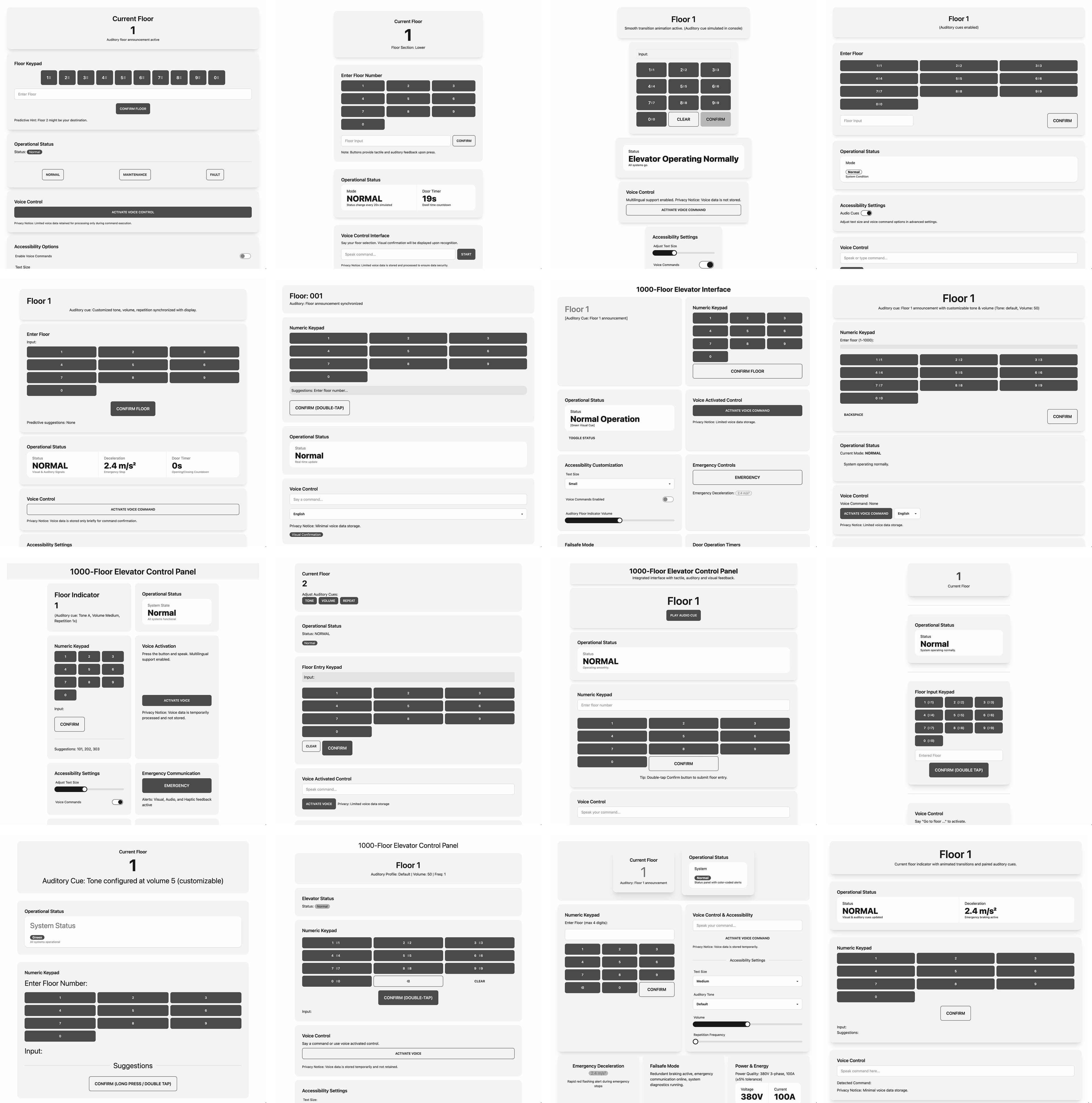

Mockups generated through iterative questioning, each revealing different design directions based on user answers. The variety demonstrates how systematic exploration uncovers nuances that assumptions would miss.

WHAT THIS PROJECT TAUGHT ME

LESSONS FROM META-DESIGN

QUESTIONING THE QUESTION

The original interview question was testing how I'd handle ambiguity. Most candidates would ask a few clarifying questions, make assumptions, and design something reasonable. I took a different approach: instead of asking for clarification, I built a system that systematically generates all the clarifying questions. The tool became more valuable than any single answer.

AI AS COLLABORATOR, NOT REPLACEMENT

Protosynthetic doesn't replace designers - it makes them better. The AI asks questions designers should be asking but might not think of. It surfaces considerations that get missed in traditional requirements gathering. The human is still central: deciding which answers matter, when to generate mockups, which directions to explore further. The AI accelerates and systematizes, but doesn't replace judgment.

TOOLS THAT THINK

Most design tools are passive: Figma, Sketch, Adobe XD. They do what you tell them. Protosynthetic is active: it asks, suggests, explores, generates. This shift - from tools that execute to tools that collaborate - is where AI's real potential lies in design. Not replacing designers, but making design processes smarter.

REQUIREMENTS AS EXPLORATION

Traditional requirements gathering is often treated as a necessary evil before "real design" begins. Protosynthetic reframes it as design itself. The question tree is a design artifact. The branching structure visualizes the problem space. The act of answering questions is ideation. Requirements and design happen simultaneously.

ONE QUESTION, INFINITE CONTEXTS

The original question - "design a 1000-floor elevator" - has no single right answer. A residential building needs different solutions than a hotel, office tower, or mixed-use complex. Users with mobility needs require different considerations than freight operations. Protosynthetic embraces this. Instead of forcing one solution, it generates the framework for exploring all solutions. The tool is the answer.

HOW IT'S BUILT

STACK AND STRUCTURE

FRONTEND: React + Next.js + TailwindCSS + DaisyUI

- Tree visualization using custom recursive components

- Real-time chat interface with streaming AI responses

- Split-panel layout with resizable sections

- Mockup rendering with live React component preview

BACKEND: Next.js 15 (App Router)

- Route Handlers for agent orchestration (/app/api/*)

- Session management for multi-turn conversations

- Knowledge base processing and chunking

- Prompt template management for different agent roles

AI LAYER: Anthropic Claude API

- Claude Sonnet 4 (claude-sonnet-4-20250514) for all generation tasks

- Question generation and answer suggestions

- Requirements document synthesis

- UI mockup code generation

- Temperature tuning per agent type (0.3-0.7 range)

- Context window management for long conversations
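Per-agent temperatures might be kept in a small lookup table consulted when each request is built. The endpoint, headers, and body fields below follow Anthropic's public Messages API; the mapping of agents to temperatures and the `max_tokens` value are hypothetical choices within the stated 0.3-0.7 range. The function only builds the request and leaves sending it (for example with `fetch`) to the caller.

```typescript
type AgentRole = "knowledgeBase" | "questionGenerator" | "uxResearcher" | "wireframeDesigner";

// Hypothetical temperatures: lower where output should stay close to the
// source material, higher where variety in questions or layouts helps.
const TEMPERATURE: Record<AgentRole, number> = {
  knowledgeBase: 0.3,
  uxResearcher: 0.3,
  wireframeDesigner: 0.5,
  questionGenerator: 0.7,
};

interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Build (but do not send) a request for Anthropic's Messages endpoint.
function buildClaudeRequest(agent: AgentRole, system: string, messages: ChatMessage[], apiKey: string) {
  return {
    url: "https://api.anthropic.com/v1/messages",
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: JSON.stringify({
      model: "claude-sonnet-4-20250514",
      max_tokens: 4096, // hypothetical output budget
      temperature: TEMPERATURE[agent],
      system,
      messages,
    }),
  };
}
```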

DATA STRUCTURES:

- Tree structure storing Q&A with parent/child relationships

- Knowledge base as processed content with categories

- Requirements document as structured JSON

- Mockup specifications as executable code + metadata
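A flat map of nodes with explicit parent and child ids is one plausible way to store the Q&A tree. The `QATree` class and `QANode` fields below are hypothetical; the `path` method shows why parent links are useful, since it recovers the chain of earlier questions a follow-up builds on.

```typescript
// Hypothetical node shape for the Q&A tree.
interface QANode {
  id: string;
  parentId: string | null; // null for top-level questions
  question: string;
  answer: string | null; // null until answered
  childIds: string[];
}

// Flat map keyed by id: constant-time lookups, explicit parent/child links.
class QATree {
  private nodes = new Map<string, QANode>();

  add(id: string, question: string, parentId: string | null = null): QANode {
    const node: QANode = { id, parentId, question, answer: null, childIds: [] };
    if (parentId !== null) {
      const parent = this.nodes.get(parentId);
      if (!parent) throw new Error(`unknown parent ${parentId}`);
      parent.childIds.push(id);
    }
    this.nodes.set(id, node);
    return node;
  }

  answer(id: string, text: string): void {
    const node = this.nodes.get(id);
    if (!node) throw new Error(`unknown node ${id}`);
    node.answer = text;
  }

  // Ancestors from the root down to `id`, useful as prompt context for follow-ups.
  path(id: string): QANode[] {
    const chain: QANode[] = [];
    let cur = this.nodes.get(id);
    while (cur) {
      chain.unshift(cur);
      cur = cur.parentId === null ? undefined : this.nodes.get(cur.parentId);
    }
    return chain;
  }

  // All answered nodes, e.g. as input for requirements synthesis.
  answered(): QANode[] {
    return Array.from(this.nodes.values()).filter((n) => n.answer !== null);
  }
}
```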

GENERATION PIPELINE:

- User answers question → stored in tree

- UX Researcher synthesizes all answers → requirements doc

- UX Wireframe Designer receives requirements → generates code

- Code executed and rendered → live interactive mockup

- Specs and next steps documented automatically
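The first two pipeline steps can be sketched as an async function that feeds every accumulated answer to a researcher agent and passes the resulting requirements to a designer agent. The agent signatures, the `Requirements` shape, and `generateMockup` are hypothetical stubs; rendering the returned code and documenting specs are left out.

```typescript
interface QA {
  question: string;
  answer: string;
}

// Hypothetical structured requirements produced by the researcher agent.
interface Requirements {
  summary: string;
  items: string[];
}

// Hypothetical agent signatures; real versions would call the model.
interface Agents {
  uxResearcher: (answers: QA[]) => Promise<Requirements>;
  wireframeDesigner: (reqs: Requirements) => Promise<string>; // component source code
}

interface Generation {
  requirements: Requirements;
  code: string;
  createdAt: number;
}

// Each run re-reads every accumulated answer, so later runs reflect new context.
async function generateMockup(answers: QA[], agents: Agents): Promise<Generation> {
  if (answers.length === 0) throw new Error("answer at least one question first");
  const requirements = await agents.uxResearcher(answers);
  const code = await agents.wireframeDesigner(requirements);
  return { requirements, code, createdAt: Date.now() };
}
```

Because the function takes the full answer list each time, calling it again after more questions yields a mockup built on the newer requirements.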

TRY IT YOURSELF

Protosynthetic is live and the code is open source.

Full Project Walkthrough

Full demonstration showing Protosynthetic in action: from project setup through question exploration to mockup generation. This 10-minute walkthrough covers all features and demonstrates both exploration modes.